Skeletons In My Closet

(or in my case, Diners In The Background, aka the Case of the Faceless Man)…

With the recent global pandemic, working from home means parking myself at my (messy) workbench, work laptop tucked under my triple monitor set-up… which, due to my small apartment, is actually in my dining area (with the dining table at my back). This means I (usually) do not enable my video/webcam feed during virtual meetings/teleconferences, mainly to:

- retain some modicum of privacy for the rest of my household

- avoid the extremely distracting situation of having people walking past behind me (since my webcam is perched on top of a monitor that has been raised to eye height, and is therefore pointing downwards, this means headless torsos traversing stage left to right or vice versa)

- avoid having some person/s sitting down at the dining table behind me, eating or whatever

Unfortunately, once I was forced to show my ugly mug via video/webcam feed during work calls, either out of guilt for not doing so while everyone else was or because I was conducting customer training, I had to scramble to find a solution to “hide” my background.

You Feel Sense Me?

I immediately thought of depth-sensing cameras, hopeful that the days of the Xbox Kinect have been left behind in the name of maturity… A full day of researching depth-sensing capable cameras came up with disappointing results, however.

The Intel RealSense D435 is the only widely available retail depth-sensing webcam considered “current”, with everything else, like the Intel SR305 and similar third-party Intel SR300-based products (like the Creative BlasterX Senz3D and the Razer Stargazer), currently relegated to “discontinued”/“unsupported” (due to the latest Intel SDK dropping support for the SR300) or “limited support” (i.e. using only the normal, 2D RGB sensor, like any other normal HD/FHD webcam).

Countless other pages I forgot to bookmark/note down simply distilled into the single fact that dabbling with depth-sensing cameras was a hit-or-miss affair, with actual software support not universal, despite Intel’s SDK already being available… Maybe if someone could write a “tween” application (here or here) that creates a fake green screen that (most) other “normal” software with native support for green screens (like OBS and Zoom) could use, that would be the ideal (compared to what follows below)…

If you have to ask why: some of the solutions proffered still pick up persons moving behind me, and often incorrectly, resulting in some disembodied torso crossing behind me; the use of depth sensing would result in picking up only me, assuming some ability to control/select the distance/depth…
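To illustrate the idea, here is a minimal sketch of the core of such a “tween” step, in Python. It is not based on Intel’s SDK or on any real product: the function name `fake_green_screen`, the `max_distance_m` parameter and the tiny nested-list “frames” are all my own assumptions for illustration. It also assumes the colour and depth frames are already aligned pixel for pixel, and that a depth of 0 means “no reading”.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
GREEN: Pixel = (0, 255, 0)     # chroma-key colour assumed for this sketch


def fake_green_screen(
    rgb: List[List[Pixel]],
    depth_m: List[List[float]],
    max_distance_m: float = 1.2,
) -> List[List[Pixel]]:
    """Keep pixels nearer than max_distance_m; paint everything else green.

    Hypothetical helper: both frames are plain nested lists of the same
    size, with depth given in metres. A depth of 0 is treated as "no
    reading" and is painted green as well (an assumption of this sketch).
    """
    if len(rgb) != len(depth_m):
        raise ValueError("rgb and depth frames must have the same height")

    out: List[List[Pixel]] = []
    for rgb_row, depth_row in zip(rgb, depth_m):
        if len(rgb_row) != len(depth_row):
            raise ValueError("rgb and depth rows must have the same width")
        out.append([
            pixel if 0.0 < distance <= max_distance_m else GREEN
            for pixel, distance in zip(rgb_row, depth_row)
        ])
    return out


if __name__ == "__main__":
    # A 2x2 toy frame: me at 0.8 m, a diner at 3.5 m, one invalid reading.
    frame = [[(10, 20, 30), (40, 50, 60)],
             [(70, 80, 90), (1, 2, 3)]]
    depth = [[0.8, 3.5],
             [0.0, 1.2]]
    for row in fake_green_screen(frame, depth):
        print(row)
```

The resulting frame would then be handed to whatever chroma-keying software you already use; the part that actually matters here is the adjustable distance cut-off, which is what would keep the diners behind me out of the picture.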

Since I did not want to spend money on something that may work (but most likely not), that brought me back to the “standard” webcam + “human shape/form sensing” and “‘smart’ background removal”…

I tested several options I could find, namely:

- XSplit VCam

- CyberLink PerfectCam

- Snap Inc.’s (of Snapchat fame) Snap Camera

One key point for me, which may not be applicable to you, is that I needed the software to work with BlueJeans, the “video conferencing application of choice” for work.

2021/07/01 Update: With my work moving over from BlueJeans to Zoom (citing that the latter is more commonly found everywhere, probably due to their offering of a “free” tier), this is no longer a requirement. Zoom itself also introduced non-green screen background replacement shortly after this article was written, and to date, its outline detection appears to equal or surpass Snap Camera’s.

2020/07/13 Update: BlueJeans surprised me today with an updated version, with a new “background” feature à la Zoom’s virtual background feature (i.e. not requiring chroma-keying/green screen)…

The good: less CPU intensive, less lag than routing through Snap Camera (as expected).

The bad: Shape/person detection is still wanting, in comparison with Snap Camera’s (better) or even PerfectCam’s (best) shape detection – persons walking in my background still “appear” in BlueJeans, unlike with the other two, despite the rather large distance separation.

Jump past the break to see what I eventually use now…

Failure To Launch

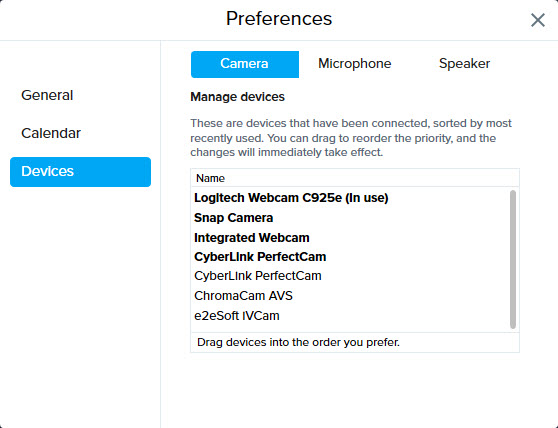

Unfortunately, XSplit VCam failed immediately, as BlueJeans could not detect it at all (although I am also unsure why PerfectCam appears twice).

Nearly Perfect

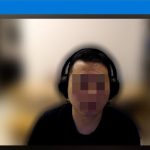

CyberLink PerfectCam was… nearly perfect, showing a clear outline between the “detected form” (i.e. me) and the background, and correctly detecting my headphones also… Their touted “AI recognition” appears to work… although it does falter if I wave my hand…

They do provide the ability to do a “basic” blur of your background to varying degrees of your choosing, or to simply replace your background with one of three provided images, with an option to use your own… Whether a movie or animated image (APNG/GIF) may be used instead is unknown…

Changes In A Snap

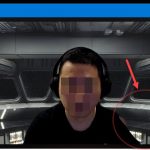

Up next was Snap Inc.’s Snap Camera… The edge detection was not as good as PerfectCam’s, at times missing parts of my headphones, other times totally amputating parts of me… and it, too, faltered in the hand wave test (the 2nd last picture below – showing just a smidgen of the thumb of my raised hand)… but it did provide loads of “lenses”… all for free (for now?)… It even allows animated backgrounds and overlays…

The Chosen One

Given limited time, I easily settled on the Snap Camera – if not for the multitude of “lenses” (anything from a simple blur to my current go-to, a “Star Destroyer” background), then definitely for the (currently) unbeatable price of “free” (compared to PerfectCam’s USD50 per year subscription).

I also note that neither fared well if I placed the camera off-center (i.e. where your face is at an angle), although I do not have screen captures showing these…

Other Tips

If you are faced with a situation similar to mine, e.g. a non-static, non-office background, then I would advise:

- a static picture rather than just a blurred background – large moving bodies (i.e. a person walking nearby behind you) are still distracting even when blurred (compared to an office environment, where movement is likely to be further away)

- if possible, a background whose colours differ drastically from those of your clothes, hair and skin (and headphones, if relevant)

Note that sometimes, Snap Camera actually shows some “portions” of that moving body, either a clear spot on a blurred background, or suddenly briefly teleported into existence in front of your chosen background. I did not have a chance to test this with PerfectCam.

Because of this, your best bet to deal with distracting/moving backgrounds may be simply to have a green/blue screen, or a huge whiteboard, or a curtain behind you…

Either that or someone builds that software “layer” for Intel’s D435 to enable a “fake” green screen to allow use with chroma-keying-capable software…